Among other topics, Archer’s student handbook outlines community values, policies and expectations around academic integrity. The handbook’s definition of academic dishonesty has been expanded to reference artificial intelligence with the phrase, “plagiarizing or presenting another person’s work or ideas as the student’s own, including the use of artificial intelligence.” The use of AI has also been added to the examples of plagiarism provided in this section.

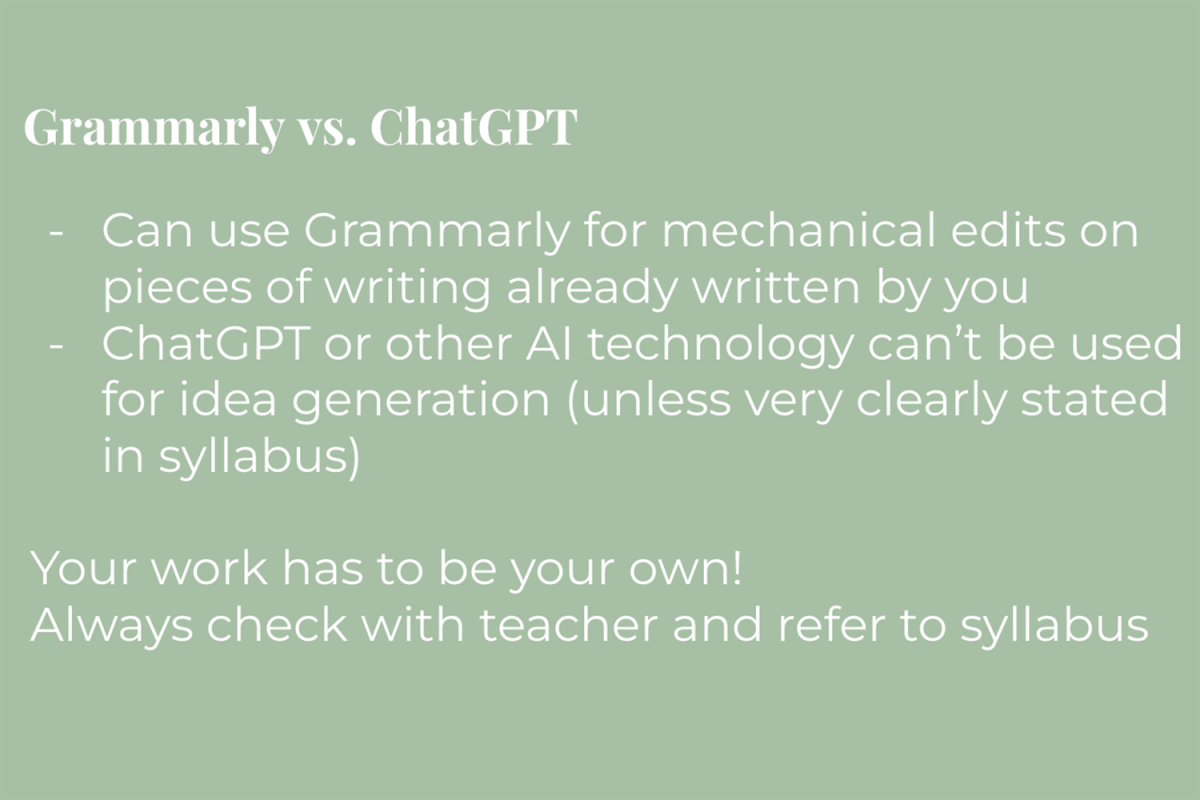

Honor Education Council representatives from grades nine through 12 presented at grade-level meetings Sept. 5 to clarify current expectations around AI. The presentations covered the handbook’s new phrasing about AI, Grammarly versus ChatGPT and how AI should be referenced in acknowledgements for academic assignments.

Presenters also encouraged students to check their class syllabi, to ask their teachers if they are unsure about policies and to trust themselves and their work. Senior Chair of HEC Alejandra Cortes (’24) helped organize these presentations.

“Our main goal was to just provide more clarity for the students so that we could minimize the amount of infractions this year since, last year, we saw a lot of plagiarism,” Cortes said. “We just wanted to express to students that if they are ever unsure about a policy, then they should go directly to the teacher because some teachers have different expectations about AI.”

HEC member Natalie London (’26) said the presentations were a useful way to synthesize and convey information to her grade, particularly about ChatGPT and Grammarly. She also highlighted the importance of establishing ethical boundaries as a community.

“Not so much just as a member of HEC, but as a member of the Archer community, I think that it’s important that all the work that we make as Archer students is our own, for ethical, moral reasons that pertain to self-value,” London said. “It crosses into more [of] an ethical and moral boundary, and I feel like as an Archer community, we’re good at knowing where that line is.”

Cortes met with department chairs, including History Department Chair Elana Goldbaum, Tuesday, Sept. 12, to discuss expectations and ethics around AI in a classroom setting.

“My takeaways from the meeting with Alejandra and the other department chairs is a desire to have clarity about expectations for students about when and how they can use AI and also aligning with other teachers about how we plan on communicating that to students,” Goldbaum said.

Cortes said the department chairs are planning ways to further discuss and clarify their policies regarding AI with students.

“I think we identified that there still needs to be work done within the departments,” Cortes said. “For example, in English [classes], the main thing is that it comes down to specific assignments, and we just need to make it clear that a lot of AI platforms, including Grammarly, aren’t allowed most of the time because it can rearrange one’s writing, and that is a form of plagiarism based on the handbook language.”

HEC members held an open session Friday, Sept. 15, for community members to generally discuss the handbook changes and AI. According to Cortes, many students said they would like to have lessons about AI to help them better understand its abilities.

Additionally, Goldbaum emphasized the importance of being conscious of the ethical discussions around AI in different situations, including copyright and possible implications for art. She said the history department is considering how to utilize the new technology instead of banning it completely, and that teachers in the history department want to have an open dialogue about possible uses of AI. She also said standards and uses of AI will continue evolving.

“Some of our teachers are really excited to dive into using it on assignments to help deepen student thinking, but I think we also have to be cognizant of the ethical discussions out there,” Goldbaum said. “We want to be sure to empower students too that AI is not a source, just like Google is not a source itself, so they’re still developing skills to critically think about the technology that we are using. But I don’t think there’s an adult, at least, that I know of, that’s like, ‘We’re never going to use it’ because it’s here, so we have to adapt, but it’s going to be a learning process for us all.”