At the beginning of the 2023-2024 school year, the academic dishonesty section of Archer’s student handbook was updated to incorporate guidelines for artificial intelligence. It now states that academic dishonesty includes “plagiarizing or presenting another person’s work or ideas as the student’s own, including the use of artificial intelligence.”

In light of this addition, our editorial board has thought about our publication’s practices with AI and how professional and scholastic journalism will be impacted by this technology.

Some say AI will soon replace human journalists thanks to its timeliness and efficiency. However, while AI can produce grammatically correct writing, it lacks the creativity, critical thinking skills and introspection that come with the human experience. For example, when the New York Times used AI chatbots to write short college essays for Harvard, Yale and Princeton, many of the responses were vague, generic and inaccurate, such as a response that used false song lyrics and incorrect interpretations.

According to the New York Times, Google is testing a “personal assistant” for journalists that uses AI to “take in information — details of current events, for example — and generate news content.” Now that AI tools are being tailored to journalists, the credibility of future reporting is even more worrisome. In the summer, a Georgia man sued OpenAI for defamation after ChatGPT generated a false legal summary accusing him of embezzlement. ChatGPT has also been known to fabricate articles and claim they were real articles from The Guardian.

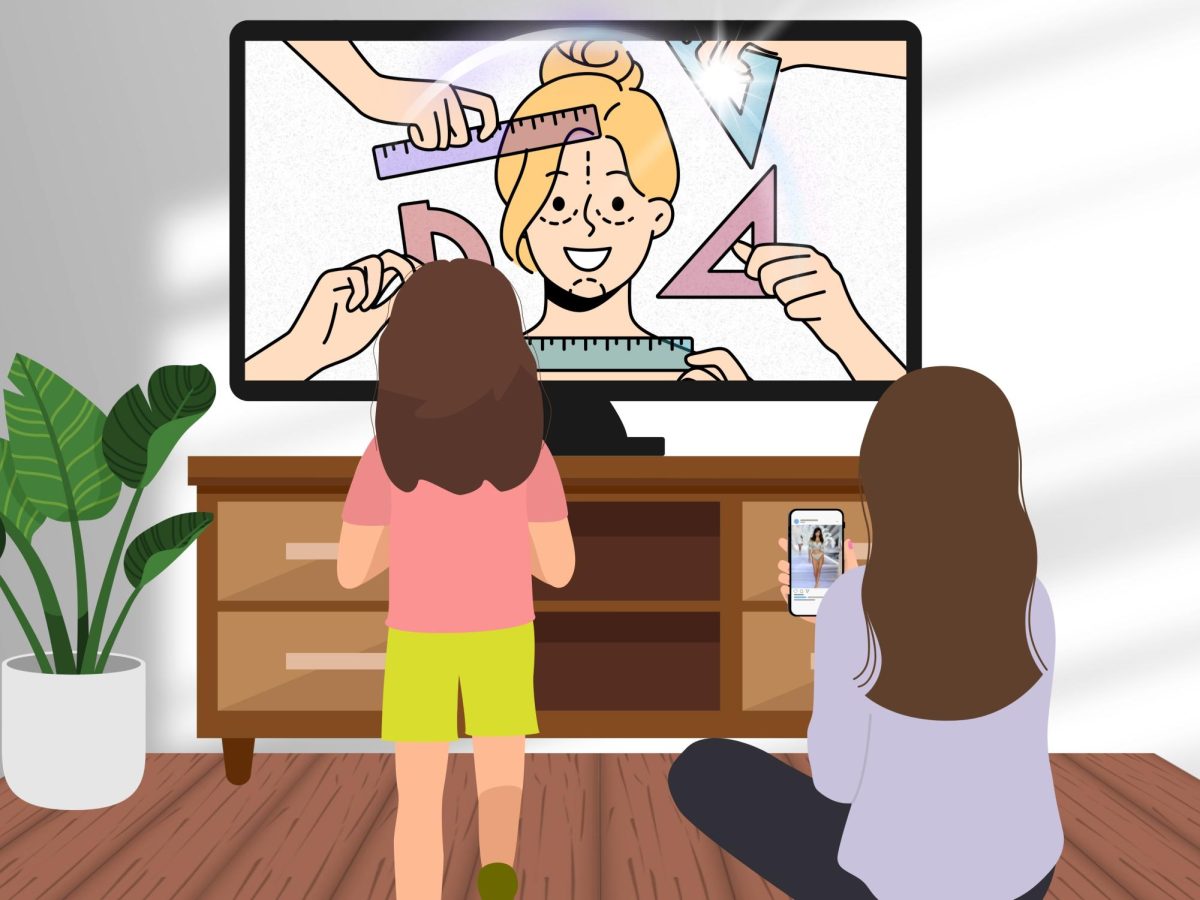

AI tools — when solely relied upon without fact-checking and ethical considerations — perpetuate the cycle of misinformation not only in articles, but also in photography. In The Oracle, we use Photoshop for cropping and adjusting light levels in images but always maintain the authenticity of the photo captured. However, as AI-generated images become more realistic, ethical dilemmas arise: these images are not newsworthy, and, if used for articles, would cause readers to gain a false perception of the world around them.

As our editorial board looks at these examples, we begin to wonder if AI can devalue journalism. What function does photojournalism have if it doesn’t capture the truth? What’s the purpose of journalism if it isn’t factual, credible or reliable? Is there a point to storytelling if it doesn’t represent and resonate with the human experience?

However, there are also benefits at the intersection of AI and journalism. With AI, articles can be translated into multiple languages or converted to speech, while audio content can be transcribed, making news more accessible. AI can also find and analyze patterns of bias in headlines and stories, which can help publications be more objective in their reporting. Today, some news publications, including Sports Illustrated, have already begun using AI to help produce full articles that draw on decades of previous Sports Illustrated articles.

But if news articles are sold to improve AI technology, can journalism still be considered independent? Trustworthy?

There are still many unknowns about the future of AI technology. Much like writers in the entertainment industry, some journalists are fighting for job security against AI. Maybe with time, research and a greater application of ethics, professional news publications will find the best practices to operate alongside this new technology.

As of today, we, the student editors of The Oracle, know it can only be us who tell the stories and opinions of the students in our community in a way that is original, truthful and impactful.

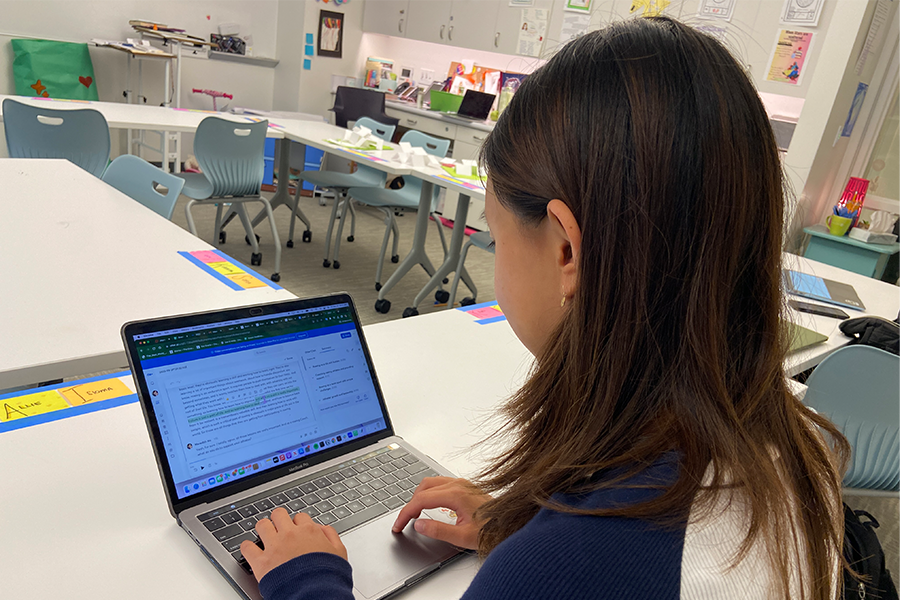

The Oracle does not use AI chatbots like ChatGPT in any form. We only use Otter.ai, a voice-to-text tool that uses AI, for interviews. However, we always listen to the recorded audio and edit the transcription to ensure the quotes are accurate. Additionally, in WordPress, the site where we write articles, some students use Grammarly for spelling and minor grammatical edits, though this is not an overall policy for the publication.

The brainstorming, gathering of sources and information, fact-checking, writing and editing of articles are completed by our staff of student journalists with the assistance of our adviser Kristin Taylor. In the coming weeks, The Oracle will be updating our staff manual and editorial policy to state and clarify our uses of AI.

In our increasingly digital world, interaction with AI will be inevitable. Nevertheless, we believe journalists should prioritize their own brainstorming and writing capabilities and use AI only for transcription and minimal editing help. With these guidelines, strong ethics and clarifications of our policy, The Oracle will continue maintaining its journalistic integrity and amplifying students’ authentic voices.

Jason • Feb 5, 2024 at 9:29 am

GPT-style language models do not tell, lie, or inform. They predict how a human would respond and generate text accordingly. They do not value truth, accuracy, sensitivity, or the rights of those who own the training data. ChatGPT can’t even play chess properly.

People do not understand these points. They believe that AI is infallible, hoping to use it as a replacement for ever-distant gods and untrustworthy publications. They don’t understand how their tools work, and they don’t care, so they rely on LLMs to do what they can’t do.

We need to teach people that they should tear apart their stuff and understand how things work.