In the past decade, artificial intelligence has advanced rapidly, producing everything from self-driving cars to chatbots readily available on everyday devices. According to Morgan State University, the development of AI brings efficiency to our lives and to the workforce, including journalistic practices.

In recent years, AI has begun to transform the way news is produced, distributed and consumed. It assists with time-consuming tasks such as language translation, summarizing and transcribing, which frees journalists to focus on more complex and investigative reporting. For example, The New York Times uses AI tools to create initial drafts of headlines and summaries, and AI has even helped its investigative journalists scan satellite imagery for bomb craters, which the journalists then review manually.

Although AI can aid journalistic practices, there are ethical implications to consider. In 2023, Maggie Harrison Dupré of Futurism found that Sports Illustrated had published AI-written pieces under the bylines of fictitious journalists, which shocked the public. Her reporting later helped uncover a growing number of AI-generated articles at other major news outlets. Head of Scholastic Journalism Kristin Taylor said she is skeptical about the use of AI in journalism.

“AI is great for categorizing or summarizing, but I would never want to use AI to write part of my article or to even write my caption. How does AI know what’s in that caption? AI’s brain is created from what is fed to it from the internet, and the internet can be a pretty awful place,” Taylor said. “I’d be really curious about how they are balancing their code of ethics with their decision to start integrating AI. What are the parameters? How are they ensuring it’s accurate? I want to know more. I always seek to understand first, but as a gut reaction, that makes me sad.”

Technology columnist Allie Yang (’25) said a common misconception about AI, particularly ChatGPT, is that it serves as a reliable research tool that compiles accurate information. However, a 2023 study conducted by NewsGuard found that when ChatGPT-3.5 was tested with a series of prompts, it generated misinformation in 80% of its responses. Yang said that in an era of extreme political and social division, AI-generated misinformation would fuel further conflict and misunderstanding.

“I think AI kind of produces what we want to hear. It’s made with a lot of human biases in mind, whether that’s left or right, and I think because of that, the extremism is going to shift a lot more. If people don’t take into account that the AI is not this impartial source, I think people are going to be swayed to be a lot more polarized,” Yang said. “That’s what’s easy for people to do nowadays — is to be very stringent on one side and very vehemently opposed to another.”

To counter the polarization AI can feed, Yang suggested seeking out sources that challenge one’s views in order to get a more balanced picture.

“A lot of us are more polarized than we want to admit to ourselves … Those barriers of disagreement might be more easily broken than you might think,” Yang said. “I also think we should be careful about who is using AI and when. We’re not at a stage yet where AI is common in journalism. We’re still at a stage where the New York Times adding [AI] was this huge thing, and so watching out for those developments is going to be key as well.”

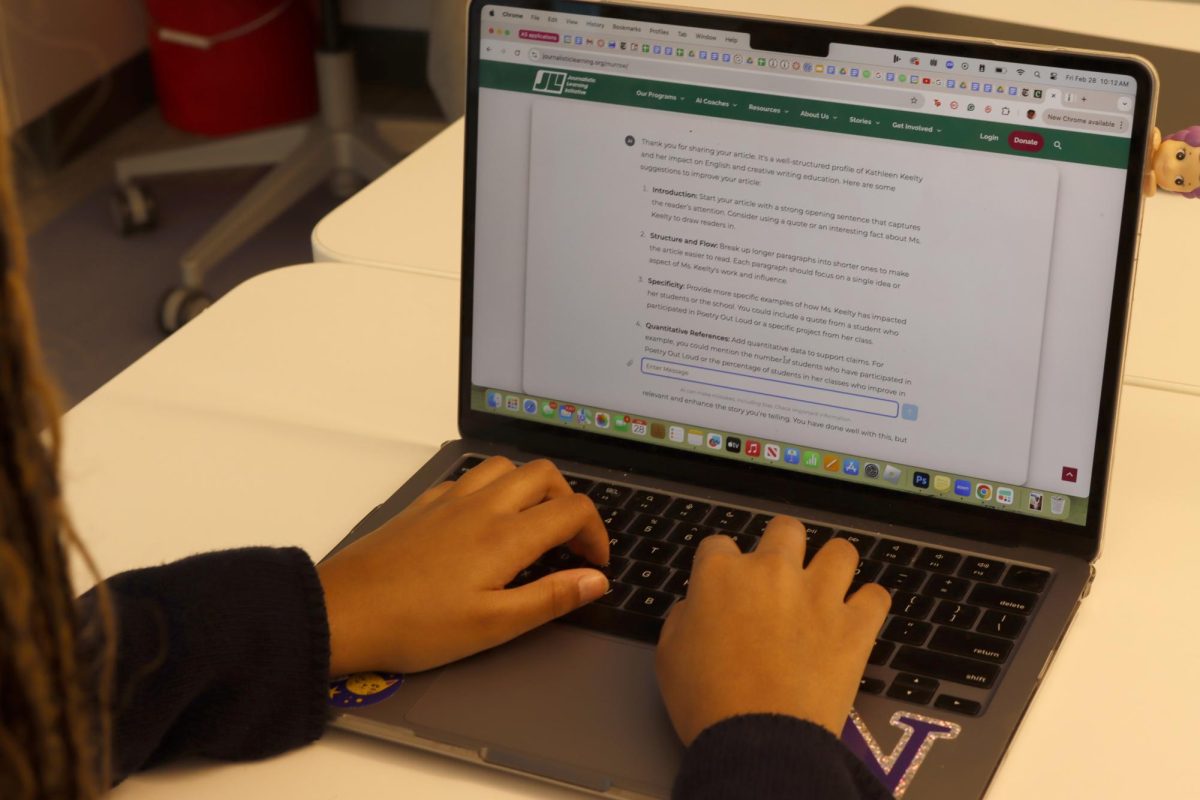

The Oracle Editorial Board’s AI policy states, “Aligned with our commitment to journalistic integrity and amplifying students’ authentic voices, The Oracle does not use AI chatbots, including but not limited to ChatGPT, in any form.” The board does permit the use of Murrow AI, a coaching tool that supports reporters by guiding them as they develop interview questions and story ideas.

“It coaches more than it replaces, and I think that type of AI is great because what you’re doing there is having an extra coach,” Taylor said. “It doesn’t replace an editor, it doesn’t replace me as a teacher, but it is an additional coach that doesn’t do it for you.”

In an NPR article, Harrison Dupré said AI will become increasingly integrated into our lives. However, she said AI lacks the human perspective and creativity that quality reporting requires.

“As a journalist, part of what you do when you report a story is you go out and have lots of conversations, and you’re learning what the story is really about. So AI doesn’t know how to take an angle if it doesn’t understand how you frame a story, [which] impacts how the audience hears that story and understands that story. We do, as humans, understand that,” Taylor said. “So we have our biases, and we have to always be aware of them and be thinking about them, but at least we are consciously thinking about that and not having [a] machine do it for us.”