Disclaimer: This article discusses mature and sensitive themes and terms that may not be suitable for younger readers.

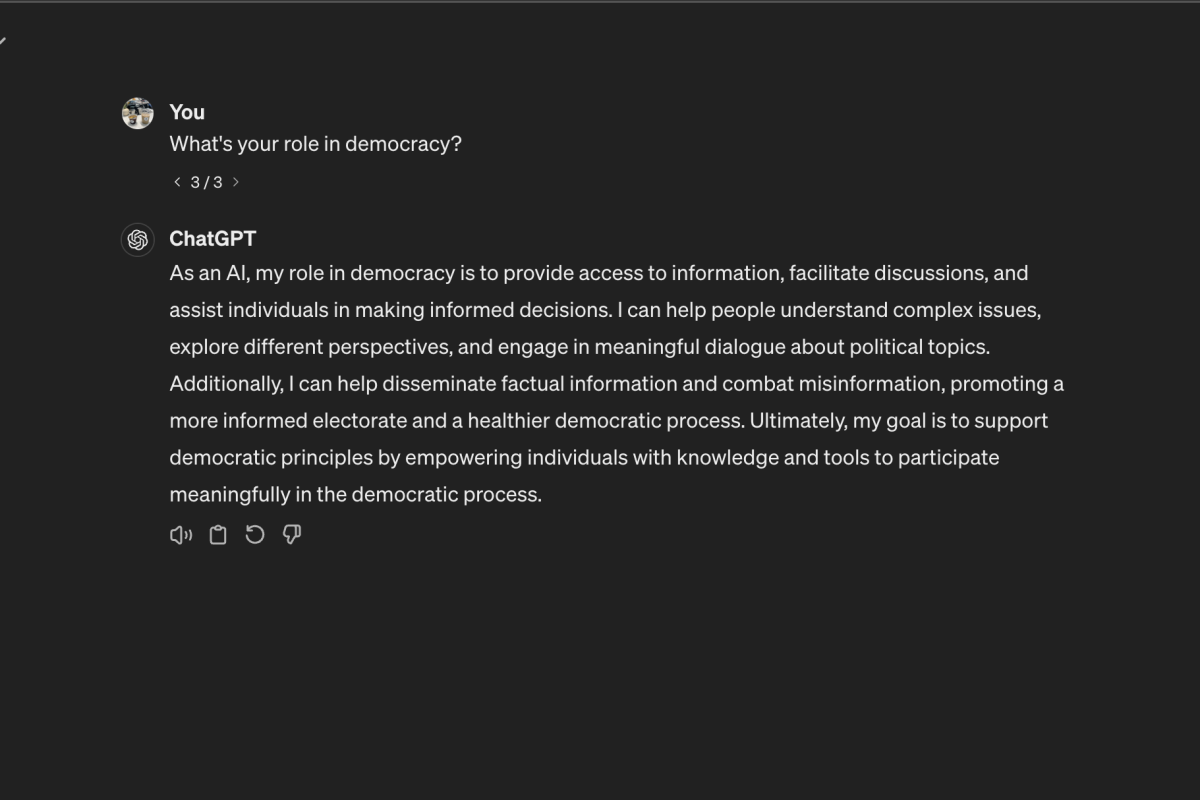

It’s like slang, but not quite. Politically rooted, Algospeak has gradually become a new dialect of mainstream social media. A blend of “algorithm,” here referring to social media’s content filters, and “speak,” the term was coined during the COVID-19 pandemic, when the online world seemed to be the main gateway for public self-expression. The purpose of this makeshift dialect is to evade social media platforms’ moderation rules.

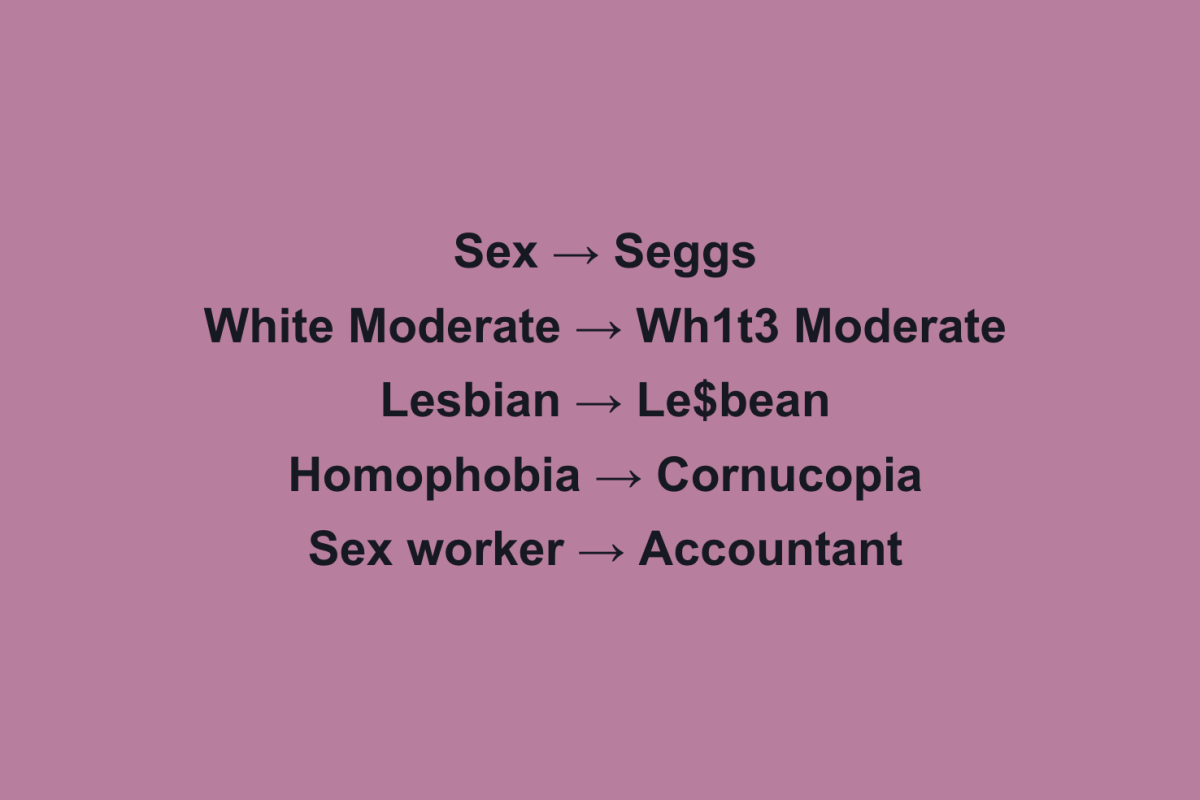

This “language” consists of English words with numbers, symbols or letters substituted in arbitrary places to create “new” words. For example, “lesbian” becomes “le$bean,” “Ku Klux Klanner” becomes “Ku K1ux K1ann3r” and “sex” becomes “seggs.” Other Algospeak terms are even less conspicuous than their standard English counterparts because they swap the original word for an unrelated word or euphemism: “dead” becomes “unalive,” “homophobia” becomes “cornucopia,” “abortion” becomes “camping” and “sex worker” becomes “accountant.”

Notice the pattern?

Creators tend to use Algospeak when a topic is “controversial,” such as race or gender identity. Content that discusses, educates or persuades on such topics is rumored to be disproportionately likely to be banned, which is why creators turn to Algospeak to maintain their internet presence while avoiding censorship.

Content creator Kahlil Greene, for instance, who posts educational videos about race, lectured on Dr. Martin Luther King Jr.’s “Letter From Birmingham Jail,” using real quotes from the letter. To avoid being “shadow banned” (the alleged practice of suppressing videos from people’s feeds because of their controversial nature), Greene resorted to Algospeak, changing “white moderate” to “wh1t3 moderate,” for example.

This TikTok was not Greene’s first use of Algospeak. He noted in a New York Times piece that content about Black history as a whole is often strictly controlled and less promoted by the algorithm, which wastes the time creators spend scripting, filming and researching. His mounting exasperation with the platform’s moderation habits prompted him to turn to Instagram instead. Because Greene is a full-time content creator, his livelihood depends on video viewership, so strict moderation can have grave consequences for something as fundamental as his income, meaning he needs Algospeak to stay on TikTok.

It’s not only the videos themselves that are affected, but their comments as well. For instance, earlier this year, the official TikTok account of the Human Rights Campaign was temporarily banned for using the word “gay” in a comment. Although TikTok reversed the ban and deemed it a careless error, this instance demonstrates the overbearing, discriminatory and homophobic nature of TikTok’s moderation, especially toward content that serves and shares the stories of historically marginalized groups.

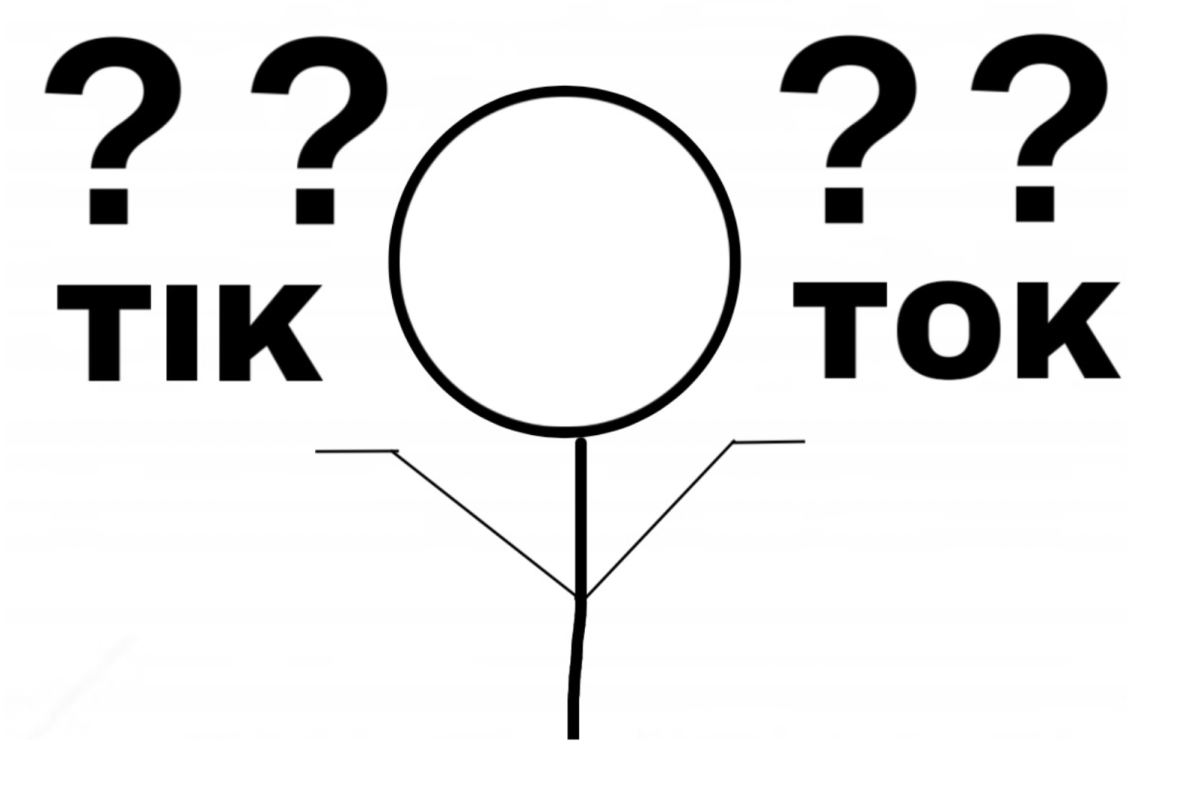

The underlying complication, however, is that users can only speculate about the real reasons for being banned, because under TikTok’s policy, moderators are obligated to give only vague explanations like “this comment violates our Community Guidelines.” According to TikTok’s website, these guidelines are meant to “empower [the] community with information” and maintain TikTok’s goal of not “[allowing] any hateful behavior, hate speech or promotion of hateful ideologies.” Conversations surrounding censorship must therefore be guided by ethical dialogue with equity at the forefront, so that moderation still prevents hate groups such as the KKK from having their voices amplified.

Since the overturning of Roe v. Wade in 2022, studies have found that “more than half of Americans say they’ve seen an uptick in Algospeak as polarizing political, cultural or global events unfold,” and that “almost a third of Americans on social media and gaming sites say they’ve ‘used emojis or alternative phrases to circumvent banned terms.’” On TikTok itself, 113 million videos were taken down between April and June of this year alone, and 48 million of these were removed by automated systems. This means that roughly 42% of TikTok’s removals lack critical thought or evaluation and instead blindly follow restrictions set by the company.

Users must recognize Algospeak as more than a humorous set of word substitutions and instead see it as a sign of censorship and its broader influence. Social media platforms like TikTok are worldwide gateways of opinion and expression, especially for groups outside of the dominant culture; we cannot lose their essence and purpose to one-sentence content bans.
